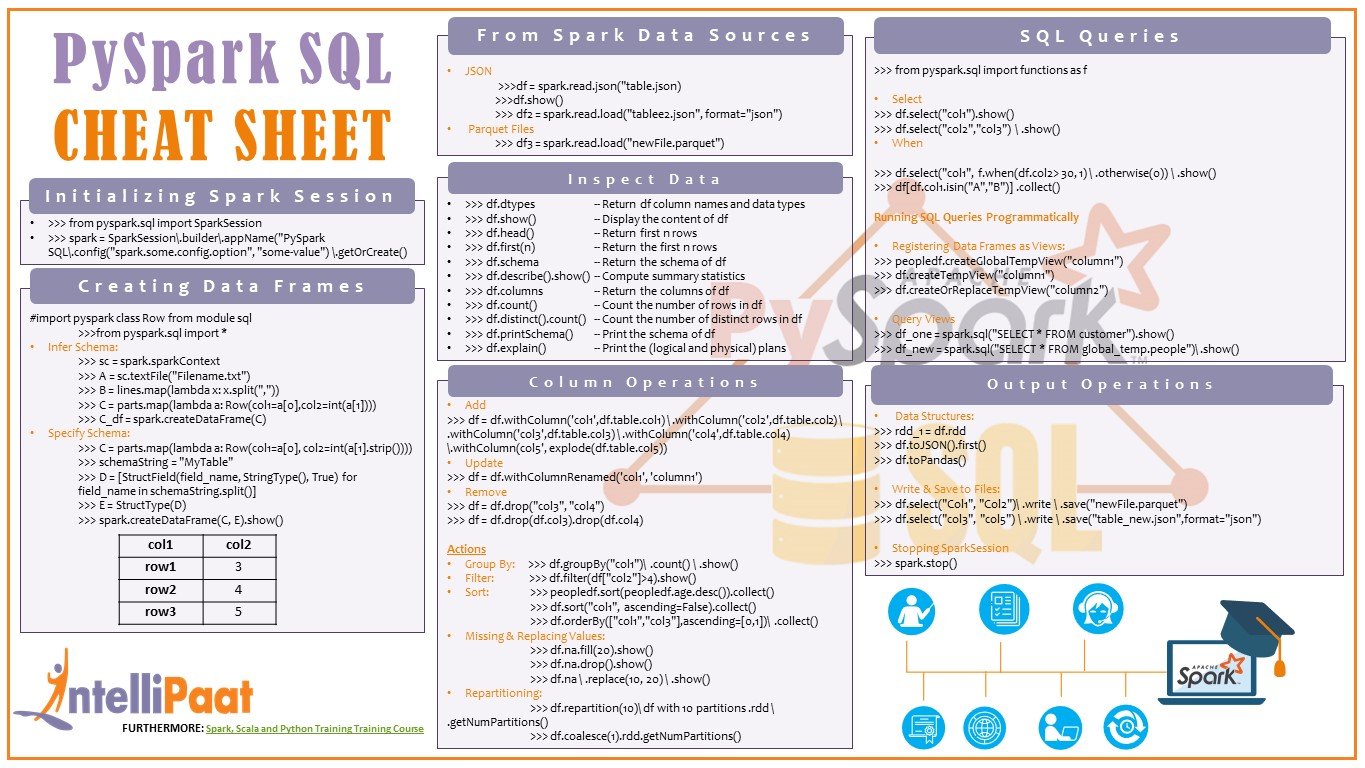

This PySpark SQL cheat sheet is a quick guide to PySpark SQL: its keywords, variables, syntax, DataFrames and SQL queries. PySpark is a tool that combines Apache Spark with Python so that you can write Python in Spark; put simply, it is a Python API for Spark.

By Karlijn Willems, DataCamp.

Big Data With Python

Big data is everywhere and is traditionally characterized by three V’s: Velocity, Variety and Volume. Big data arrives fast, comes in many forms and has a huge volume. Whether you work as a data scientist, data engineer, data architect or in any other role in the data science industry, you’ll get in touch with big data sooner or later, as companies now gather an enormous amount of data across the board.

Data doesn’t always mean information, though, and that is where you, data science enthusiast, come in. You can make use of Apache Spark, “a fast and general engine for large-scale data processing” to start to tackle the challenges that big data poses to you and the company you’re working for.

Nevertheless, doubts can always arise when you’re working with Spark. When they do, take a look at DataCamp’s Apache Spark Tutorial: ML with PySpark, or download the cheat sheet for free!

In what follows, we’ll dive deeper into the structure and the contents of the cheat sheet.

PySpark Cheat Sheet

PySpark is the Spark Python API that exposes the Spark programming model to Python. Spark SQL, then, is a module of PySpark that allows you to work with structured data in the form of DataFrames. This stands in contrast to RDDs, which are typically used to work with unstructured data.

Tip: if you want to learn more about the differences between RDDs and DataFrames, but also about how Spark DataFrames differ from pandas DataFrames, you should definitely check out the Apache Spark in Python: Beginner's Guide.

Initializing SparkSession

If you want to start working with Spark SQL in PySpark, you’ll need to start a SparkSession first: you can use it to create DataFrames, register DataFrames as tables, execute SQL over those tables and read Parquet files. Don’t worry if all of this sounds very new to you. You’ll read more about it later on in this article!

You start a SparkSession by first importing it from the sql module that comes with the pyspark package. Next, you can initialize a variable spark, for example, to not only build the SparkSession, but also give the application a name, set the config and then use the getOrCreate() method to either get a SparkSession if there is already one running or to create one if that isn’t the case yet! That last method will come in extremely handy, especially for future reference, because it will prevent you from running multiple SparkSessions at the same time!

Cool, isn’t it?

Inspecting the Data

When you have imported your data, it’s time to inspect the Spark DataFrame by using some of the built-in attributes and methods. This way, you’ll get to know your data a little bit better before you start manipulating the DataFrames.

You’ll probably already know most of the methods and attributes mentioned in this section of the cheat sheet from working with pandas DataFrames or NumPy, such as dtypes, head(), describe() and count(). There are also some that might be new to you, such as the take() and printSchema() methods, or the schema attribute.

Nevertheless, you’ll see that the learning curve is quite modest, because most of what you already know from other data science packages carries over.

Duplicate Values

As you’re inspecting your data, you might find that it contains duplicates. To remedy this, you can use the dropDuplicates() method, for example, to drop duplicate rows from your Spark DataFrame.

Queries

You might remember that one of the main reasons for using Spark DataFrames in the first place is that they give you a more structured way of dealing with your data. Querying is one example of that structure, whether you’re using SQL in a relational database, a SQL-like language in a NoSQL database, the query() method on pandas DataFrames, and so on.

Now that we’re talking about pandas DataFrames, you’ll notice that Spark DataFrames follow a similar principle: you use methods to get to know your data better. In this case, though, you won’t use query(). Instead, you’ll rely on other methods to get the results you want: to begin with, select() and show() will be your friends whenever you want to retrieve information from your DataFrames.

Within these methods, you can build up your query. As with standard SQL, you specify exactly which columns you want to get back within select(). You can do this with a regular string or by referring to the column through the DataFrame itself, as in the following examples: df.lastName or df["firstName"].

Note that the latter approach gives you more freedom when you want to narrow down exactly what to retrieve with additional functions such as isin() or startswith().

Besides select(), you can also make use of the functions module in PySpark SQL to specify, for example, a when clause in your query.

All in all, you can see that there are a lot of possibilities for those who want to handle and get to know their data better with the help of select(), show() and the functions of the functions module.

Add, Update & Remove Columns

You might also want to add, update or remove columns in your Spark DataFrame. You can easily do this with the withColumn(), withColumnRenamed() and drop() methods. You’ll probably know by now that you also have a drop() method at your disposal when you’re working with pandas DataFrames. You can read more here.

GroupBy

Again, just like with pandas DataFrames, you might want to group by certain values and aggregate others. The groupBy() method, in combination with count() and show(), retrieves and displays your data grouped by age, together with the number of people of each age.